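Marketers Turned Web-Scraping Pros, Hands-On | 5 Social Platforms + 2 E-commerce Sites

1. HTML page structure: how do web pages actually transmit data? A scraping essential

2. Data transfer: GET vs. POST and how their network packets differ

3. HTML scraping with GET (tutorial): fetching Yahoo stock data, the first step toward algorithmic trading

4. HTML scraping with GET (hands-on): scraping FoodPanda, Taiwan's largest food platform, and bringing the panda home

5. Data analysis (hands-on): FoodPanda's popular dishes | Spotting the hottest cuisine in each area

6. JSON scraping (tutorial): Google Trends | Keeping track of the hottest keywords

7. JSON scraping (hands-on): scraping PChome 24h e-commerce | "I'm no mathematician, but that sounds pretty good"

8. HTML scraping with POST (tutorial): Taiwan stock market data | A lifeline for retail investors

9. HTML scraping with POST (hands-on): scraping UberEat, the global food platform

10. Pandas scraping (tutorial): Yahoo stock scraper | No more staring at the ticker

11. Pandas scraping (hands-on): historical weather forecast data for every region of Taiwan

12. Data analysis (hands-on): visualizing weather forecasts | One chart to dodge the rainy season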

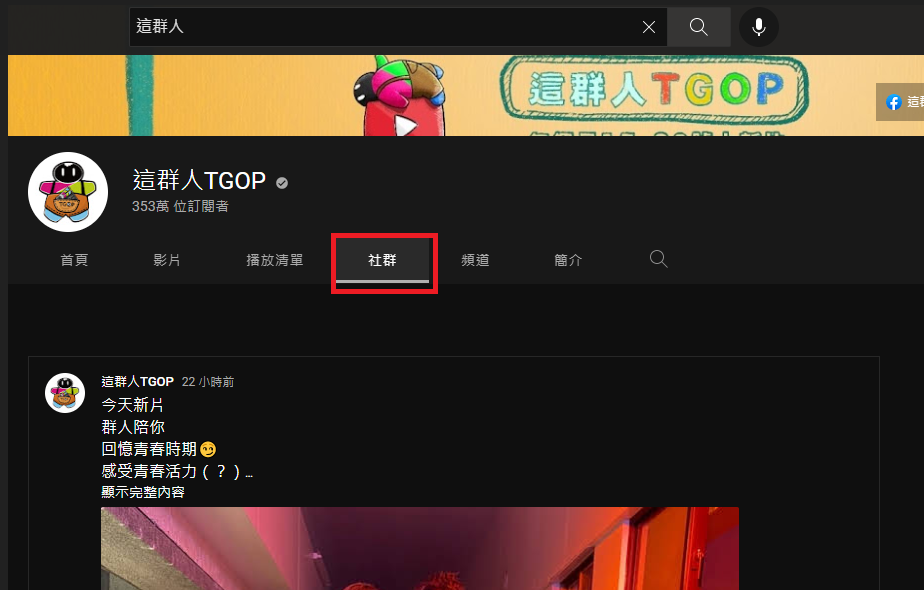

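YouTube Scraping: Community Data | Using Python to Uncover How YouTubers Build Their Fan Base [Code Included]

In the previous lesson, "YouTube Scraping: Channel Data | The Python Skill You Can't Do Without in the Age of YouTuber Influencers [Code Included]", we scraped each YouTube channel. This lesson continues from that result.

1. Deliverables

This lesson walks you through using Python's Selenium package to scrape the community posts of each YouTube channel. For every post we collect the channel name, channel URL, post link, post content, post time, like count, comment count, and comment contents. The result is saved as a CSV file, which you can then open and edit directly in Excel.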
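To make the deliverable concrete, here is a minimal sketch of the final save step using only the standard library. It is not the author's code: the `save_posts` helper, the output file name, and the sample row are illustrative assumptions, and the column names simply reuse the list names that appear later in this lesson. The `utf-8-sig` encoding writes a byte-order mark so that Excel recognizes the file as UTF-8 and shows Chinese text correctly.

```python
import csv
import os
import tempfile

# Column order follows the list names used later in this lesson.
COLUMNS = ["youtuberChannel", "channelLink", "articleLink", "articleContent",
           "postTime", "good", "commentNum", "comment"]

def save_posts(rows, path):
    """Write one CSV row per post; `rows` is a list of dicts keyed by COLUMNS."""
    # utf-8-sig prepends a BOM so Excel opens the file as UTF-8.
    with open(path, "w", newline="", encoding="utf-8-sig") as f:
        writer = csv.DictWriter(f, fieldnames=COLUMNS)
        writer.writeheader()
        writer.writerows(rows)

# Hypothetical sample row, for illustration only.
sample = [{
    "youtuberChannel": "Example Channel",
    "channelLink": "https://www.youtube.com/channel/EXAMPLE",
    "articleLink": "https://www.youtube.com/post/EXAMPLE",
    "articleContent": "Hello",
    "postTime": "1 day ago",
    "good": "12",
    "commentNum": 3,
    "comment": "{}",
}]

# Written to the temp directory so the sketch has no side effects elsewhere.
out_path = os.path.join(tempfile.gettempdir(), "youtube_community_sample.csv")
save_posts(sample, out_path)
```

In the real scraper, each row would be built by zipping the lists filled in the sections that follow.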

2. Opening YouTube with Selenium

If you are not yet familiar with Selenium, see "Introduction to Selenium | A Handy Tool for Scraping Dynamic Pages with Python". You must also set up the environment Selenium needs beforehand; if you are not sure how, see "Selenium Environment Setup and Testing | A Step-by-Step Guide to Configuring phantomjs and chromedriver".

import time

import pandas as pd
from tqdm import tqdm
from selenium import webdriver
from selenium.webdriver.common.desired_capabilities import DesiredCapabilities

# Basic settings
desired_capabilities = DesiredCapabilities.PHANTOMJS.copy()
# Replace this with your own machine's User-Agent
desired_capabilities['phantomjs.page.customHeaders.User-Agent'] = 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/76.0.3809.100 Safari/537.36'
# Path to the PhantomJS driver; it seems only an absolute path works
driver = webdriver.PhantomJS(executable_path = 'phantomjs', desired_capabilities=desired_capabilities)

# Disable notification prompts
chrome_options = webdriver.ChromeOptions()
prefs = {"profile.default_content_setting_values.notifications" : 2}
chrome_options.add_experimental_option("prefs",prefs)
# Open the browser (this reassigns driver, replacing the PhantomJS instance above)
driver = webdriver.Chrome('chromedriver',chrome_options=chrome_options)
time.sleep(5)

3. Scrolling the page

YouTube pages are also generated dynamically, so the program has to scroll the page down to load more data. The scrolling code calls time.sleep() to allow for the load time after each scroll: if network latency means the data has not loaded yet, the scraper breaks and the program stops, so the waits need to be slightly longer.

# Scroll the page
def scroll(driver, xpathText):
    remember = 0
    doit = True
    while doit:
        driver.execute_script('window.scrollBy(0,4000)')
        time.sleep(1)
        element = driver.find_elements_by_xpath(xpathText) # grab the target tags
        if len(element) > remember: # did the count grow after scrolling?
            remember = len(element)
        else: # no growth: wait a moment, then scroll once more
            time.sleep(2)
            driver.execute_script('window.scrollBy(0,4000)')
            time.sleep(1)
            element = driver.find_elements_by_xpath(xpathText) # grab the target tags
            if len(element) > remember: # did the count grow after scrolling?
                remember = len(element)
            else:
                doit = False # still no growth: stop scrolling
        time.sleep(1)
    return element # return the matched elements
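The stopping rule in scroll() can be separated from Selenium so that it is easier to reason about and to test. The sketch below is not the author's code: `scroll_until_stable`, `do_scroll`, `count_items`, `pause`, and `retries` are hypothetical names. It scrolls, counts, and stops once the count has failed to grow on `retries + 1` consecutive attempts, which mirrors the "wait and try once more" behaviour above.

```python
import time

def scroll_until_stable(do_scroll, count_items, pause=1.0, retries=1):
    """Scroll until the item count stops growing.

    do_scroll:   callable that scrolls the page once (hypothetical hook)
    count_items: callable returning how many target items are loaded
    retries:     extra attempts allowed after a scroll that adds nothing
    """
    seen = 0
    misses = 0
    while misses <= retries:
        do_scroll()
        time.sleep(pause)      # give the page time to load new items
        current = count_items()
        if current > seen:
            seen = current
            misses = 0         # progress: reset the miss counter
        else:
            misses += 1        # no new items on this attempt
    return seen

# Simulated page: each scroll loads 5 more items, up to 12 in total.
page = {"loaded": 0}

def fake_scroll():
    page["loaded"] = min(page["loaded"] + 5, 12)

total = scroll_until_stable(fake_scroll, lambda: page["loaded"], pause=0)
print(total)
```

With a real browser, `do_scroll` could wrap `driver.execute_script('window.scrollBy(0,4000)')` and `count_items` could wrap `len(driver.find_elements_by_xpath(xpathText))`, the same calls used in scroll().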

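4. Preparing the target channels

This scraper uses the CSV file produced in the earlier lesson, "YouTube Scraping: Channel Data | The Python Skill You Can't Do Without in the Age of YouTuber Influencers [Code Included]". If you have not gone through that lesson yet, go check it out now!

# Load Youtuber_頻道資料.csv (the channel list from the previous lesson)
getdata = pd.read_csv('Youtuber_頻道資料.csv', encoding = 'utf-8-sig')
# Prepare containers
youtuberChannel = [] # channel name
channelLink = [] # channel URL
articleLink = [] # post link
articleContent = [] # post content
postTime = [] # post time
good = [] # like count
commentNum = [] # comment count
comment = [] # comment contents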

This time we scrape the community tab of every YouTube channel. Each channel can have hundreds of posts, so the work has to run in a long for loop and takes quite a while.

# Scrape the channels one by one
for yName, yChannel in zip(getdata['Youtuber頻道名稱'], getdata['頻道網址']):
    # Go to the community page
    driver.get(str(yChannel) + '/community')
    time.sleep(10)
    # Scroll the page
    getAll_url = scroll(driver, '//yt-formatted-string[@id="published-time-text"]/a')
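The loop above appends '/community' directly to the URL read from the CSV. If a stored URL happens to carry a trailing slash or stray whitespace, the result contains a double slash and may not resolve as expected. A small helper can normalize the URL first; `community_url` is a hypothetical name, not part of the original code, and the channel ID in the example is a placeholder.

```python
def community_url(channel_url):
    """Return the community-tab URL for a channel URL."""
    # str() guards against non-string cells read from the CSV;
    # rstrip('/') avoids a double slash when the stored URL ends with '/'.
    return str(channel_url).strip().rstrip('/') + '/community'

print(community_url('https://www.youtube.com/channel/EXAMPLE/'))
print(community_url('https://www.youtube.com/channel/EXAMPLE'))
```

Both calls print the same URL, so the scraper behaves the same regardless of how the channel URL was stored.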

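5. Scraping every post

The scraper uses two nested for loops. The outer loop visits each channel's community tab and collects the URL of every post; the inner loop then opens those URLs one by one to scrape each post's details, such as post content, post time, comments, like count, and comment count.

    # Post URLs must be extracted first
    start = len(articleLink) # articleLink accumulates across channels; note where this channel begins
    for article in getAll_url:
        articleLink.append(article.get_attribute('href')) # post link
        postTime.append(article.text) # post time
        youtuberChannel.append(yName)
        channelLink.append(yChannel)
    print('Channel ' + str(yName) + ' has ' + str(len(articleLink) - start) + ' posts; start collecting post contents')
    for goto_url in tqdm(articleLink[start:]): # visit only this channel's posts
        # Go to the post
        driver.get(goto_url)
        time.sleep(3)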
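The reason the URLs are copied into plain lists before any post is visited is that Selenium element references belong to the page they were found on; once driver.get() loads another page, reading them raises a stale element error. The sketch below illustrates this "snapshot first, navigate later" pattern with a stand-in class so that it runs without a browser. `FakeAnchor` and `snapshot_posts` are hypothetical names, and the URLs are placeholders.

```python
class FakeAnchor:
    """Stand-in for a Selenium WebElement (illustration only)."""
    def __init__(self, href, text):
        self._href = href
        self.text = text

    def get_attribute(self, name):
        return self._href if name == 'href' else None

def snapshot_posts(elements):
    """Copy href and visible text out of the elements into plain tuples."""
    return [(el.get_attribute('href'), el.text) for el in elements]

anchors = [FakeAnchor('https://www.youtube.com/post/A', '1 day ago'),
           FakeAnchor('https://www.youtube.com/post/B', '2 weeks ago')]
posts = snapshot_posts(anchors)

# From here on only plain strings are used, so navigating away is safe.
for url, when in posts:
    print(url, when)
```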

6. Parsing comment data

First, grab the basic post content, like count, and comment count. These only require locating the right HTML tags; no scrolling or dynamic scraping is needed.

        # Get the post body
        good.append(driver.find_element_by_id('vote-count-middle').text) # post like count
        time.sleep(3)
        # Get the total number of comments (strip thousands separators before int())
        getcommentNum = int(driver.find_element_by_xpath('//h2[@id="count"]/yt-formatted-string/span').text.replace(',', ''))
        commentNum.append(getcommentNum)
        time.sleep(3)
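The int() conversion above raises ValueError whenever the element's text is not a plain number, for example when it is empty. A more defensive parser is sketched below. `parse_count` is a hypothetical helper, and it assumes the text is either empty or made of digits with optional commas; abbreviated forms such as "1.2K" are not handled and fall back to the default.

```python
def parse_count(text, default=0):
    """Convert count text such as '1,234' to an int; fall back to `default`."""
    cleaned = text.replace(',', '').strip()
    return int(cleaned) if cleaned.isdigit() else default

print(parse_count('1,234'))  # 1234
print(parse_count(''))       # 0
```

Falling back to a default keeps commentNum the same length as the other lists, which matters when they are later combined row by row.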

Next comes the more involved part: the page has to be scrolled repeatedly to load older comments, so that we can capture each comment's content, author, like count, and time.

        #--- Start collecting comments
        # Scroll the page
        getcomment = scroll(driver, '//div[@id="main"]')
        getfans = driver.find_elements_by_id('author-text') # commenters
        # Storage for comment data
        commentMan = []
        manChannel = []
        post_time = []
        comment_content = []
        comment_good = []
        count = 0 # used to number the comments
        container = {}
        for fans, com in zip(getfans, getcomment):
            if count != 0: # skip the first item: it is the YouTuber's own post
                getcom = com.text
                getcom = getcom.replace('\n回覆','') # drop the "Reply" (回覆) button label
                cutcom = getcom.split('\n')
                if len(cutcom) == 3: # nobody has liked it yet: pad with 0
                    cutcom.append(0)
                try:
                    container['留言'+str(count)] = {
                        '發言者':cutcom[0], # commenter
                        '發言者頻道': fans.get_attribute('href'), # commenter's channel
                        '發言時間':cutcom[1], # comment time
                        '發言內容':cutcom[2], # comment content
                        '讚數':cutcom[3] # like count
                    }
                except Exception: # fallback for malformed data
                    container['留言'+str(count)] = {'資料異常'}
            count = count + 1
        comment.append(container) # store all comments for this post
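The splitting logic above depends on each comment's text arriving as newline-separated fields: author, time, content, and optionally a like count, with the 回覆 (Reply) button label mixed in. Pulling it into a function makes that assumption explicit and testable. `parse_comment` and the English field names are hypothetical, and the sample strings are made up to match the layout the code above expects; they were not captured from YouTube.

```python
def parse_comment(raw, reply_label='回覆'):
    """Split a comment's rendered text into fields.

    Assumes the layout: author, time, content, [likes], with the
    reply-button label on its own line after them.
    """
    parts = raw.replace('\n' + reply_label, '').split('\n')
    if len(parts) == 3:      # no like count shown: treat it as 0
        parts.append('0')
    if len(parts) != 4:      # e.g. multi-line comments: flag instead of guessing
        return None
    author, posted, content, likes = parts
    return {'author': author, 'time': posted,
            'content': content, 'likes': likes}

sample = 'Alice\n2 days ago\nGreat post!\n5\n回覆'
print(parse_comment(sample))
print(parse_comment('Bob\n1 week ago\nNice\n回覆'))
```

Returning None for unexpected layouts plays the same role as the 資料異常 (abnormal data) placeholder above, while leaving it to the caller to decide how to record the failure.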

Author: 楊超霆, founder of 行銷搬進大程式